We know that when solving systems of linear equations, LinearSolve, despite its more abstract syntax, is much faster than Solve:

n = 1000;

m = DiagonalMatrix@RandomReal[{1, 2}, n];

b = RandomReal[1, n];

sol1 = LinearSolve[m, b]; // AbsoluteTiming

x = Table[Unique[], {n}];

sol2 = Solve[m.x == b, x]; // AbsoluteTiming

Last /@ First@sol2 == sol1

{0.124800, Null}

{1.216800, Null}

True

I wonder whether NRoots has such a counterpart, too?

…To be honest, I came across this problem because a friend of mine claimed that the Matlab function roots() is an order of magnitude faster than NRoots[]. The following is a comparison between NRoots and roots.

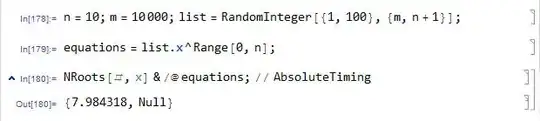

NRoots in Mathematica 9.0.1:

n = 10; m = 10000; list = RandomInteger[{1, 100}, {m, n + 1}];

equations = list.x^Range[0, n];

NRoots[#, x] & /@ equations; // AbsoluteTiming

{7.984318, Null}

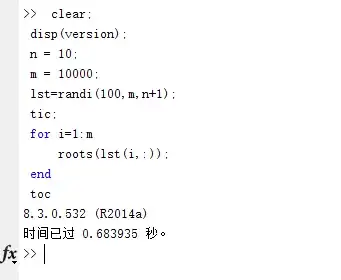

roots in Matlab R2014a:

clear;clc;

n = 10;

m = 10000;

lst=randi(100,m,n+1);

tic;

for i=1:m

roots(lst(i,:));

end

toc

Elapsed time is 0.756003 seconds.

Given the performance difference between LinearSolve and Solve, I believe that if the analysis of the polynomial's structure were eliminated, Mathematica would reach the same speed.

If such an internal function doesn't exist, can we write our own that skips the preprocessing? Although I barely know Matlab's syntax, the definition of roots() seems simple, so I think it shouldn't be hard to implement the same algorithm in Mathematica.
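As a rough illustration of what such a self-made function might look like, here is a minimal sketch of the companion-matrix approach (Matlab's roots() is documented as computing the eigenvalues of a companion matrix). The name companionRoots and the descending-order coefficient convention are assumptions made for this example only; it also assumes a nonzero leading coefficient and a degree of at least 2, and makes no claim about matching NRoots in accuracy.

```mathematica
(* Hypothetical helper, not a built-in: roots of
   c[[1]] x^n + c[[2]] x^(n-1) + ... + c[[n+1]]
   obtained as eigenvalues of a companion matrix. *)
companionRoots[c_List] := Module[{p = N[c], n, a},
  n = Length[p] - 1;                                  (* polynomial degree *)
  a = DiagonalMatrix[ConstantArray[1., n - 1], -1];   (* n x n, ones on the subdiagonal *)
  a[[1]] = -Rest[p]/First[p];                         (* first row: normalized coefficients *)
  Eigenvalues[a]]

(* Example: x^2 - 3 x + 2 has the roots 1 and 2 *)
companionRoots[{1, -3, 2}]
```

Because the input is a plain numeric list, no symbolic polynomial has to be built or analyzed before the eigenvalue computation starts.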

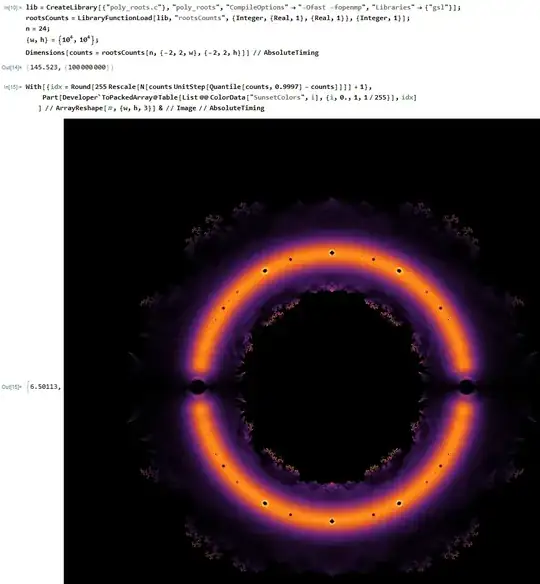

OK, since the goal of this question hasn't been completely achieved (NRoots has 3 methods, and currently only "CompanionMatrix" has been implemented), let me add something to try to draw more attention. Remember this?

Sam Derbyshire reportedly spent 4 days on a similar one, while the above image took me less than 3 minutes on my old 2 GHz laptop, using the roots[] that chyanog showed in his answer below:

(* I modified chyanog's roots[] a little for this specific task. *)

modifiedroots[c_List] :=

Block[{a = DiagonalMatrix[ConstantArray[1., Length@c - 1], -1]},

a[[1]] = -c;

Eigenvalues[a]];

s = 1000;

l = ConstantArray[0., {s + 1, s + 1}];

l[[#, #2]] += 1. & @@@

Round[1 + s Rescale@

Function[z, {Im@z, Re@z}, Listable]@

Flatten[modifiedroots /@ Tuples[{-1., 1.}, 18]]]; // AbsoluteTiming

ArrayPlot[UnitStep[80 - l] l, ColorFunction -> "AvocadoColors"]

{161.737000, Null}

Let's ignore the fact that my image is only degree 19.

This is not the end. According to my simple test (I simply added the different Method settings to the 2nd sample of this post), the "Aberth" method is probably more efficient than "CompanionMatrix" inside NRoots, so if it were implemented in an abstract form, the above image could likely be generated in an even shorter time!
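A test of that kind might look like the sketch below. It is only an assumed reconstruction, not the exact code used for the timings above: the names polys, methods and timings are made up for illustration, the sample size is reduced to 1000, and each polynomial is passed to NRoots explicitly as an equation via # == 0. The three Method names are the documented ones mentioned in the comments.

```mathematica
ClearAll[x];  (* x must be an unassigned symbol here *)
n = 10; m = 1000;
list = RandomInteger[{1, 100}, {m, n + 1}];
polys = list . x^Range[0, n];   (* m random polynomials of degree n *)

methods = {"Aberth", "JenkinsTraub", "CompanionMatrix"};

(* time NRoots over the whole sample once per Method setting *)
timings = Table[
  meth -> First@AbsoluteTiming[
     NRoots[# == 0, x, Method -> meth] & /@ polys;],
  {meth, methods}]
```

The absolute numbers will depend on the Mathematica version and the machine, so only the relative ordering of the three methods is of interest.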

NRoots takes a Method option. The three documented possibilities are "Aberth", "JenkinsTraub", and "CompanionMatrix". (I do not know if there are undocumented possibilities). From what I could see, the third of those possibilities should be similar to what roots() does. – Daniel Lichtblau Feb 05 '15 at 16:16

… Solve/LinearSolve, unless NRoots plays the role of LinearSolve with Roots being the "more general" function (insofar as it handles exact and symbolic input). I suppose one could go from the coefficient list and directly form a companion matrix and invoke Eigenvalues. Would that be faster or as reliable? I don't know. – Daniel Lichtblau Feb 05 '15 at 16:18

… NRoots that could have impact on performance vs. quality?" As I noted in a comment, the analogy to Solve/LinearSolve is just not clear to me. A bigger issue, in my mind, is that there is no clear example where NRoots is seen to perform relatively badly as compared to roots(). – Daniel Lichtblau Feb 06 '15 at 16:45

list.x^Range[0,n]==x^Range[0,n].Transpose@list – chyanog Feb 09 '15 at 09:26

list.x^Range[0, n] is simpler, but Amita has chosen the latter and taken the screenshot, so let it be, anyway it's not the main issue here :) – xzczd Feb 09 '15 at 09:33

NRoots in v10.0.2 is more than 4 times as fast as that in v9.0.1. The roots[] in my answer has similar performance as roots() of matlab. Well, that's interesting. – xzczd Feb 10 '15 at 10:48